2011 — Software is eating the world.

2022 — AI is eating software.

The most interesting trends, papers, and observations you may have missed in AI for 2022.

(read on)

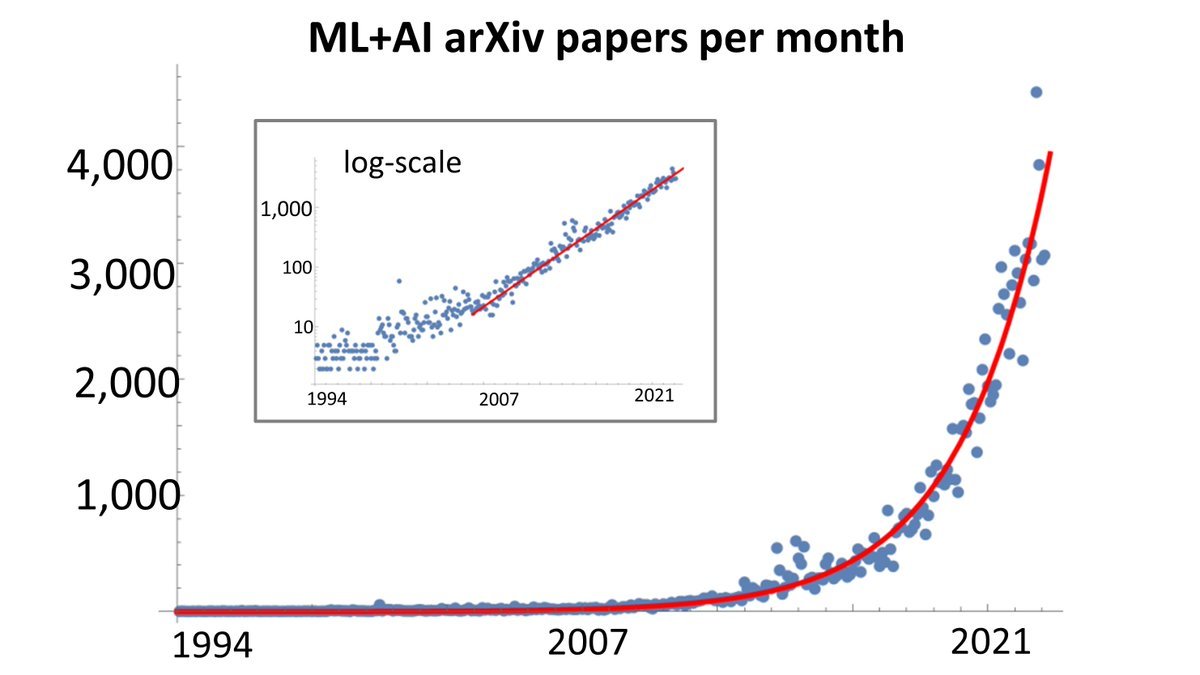

1) The rate of AI paper releases is accelerating.

This is the backdrop for the recent overwhelming amount of AI progress. Let's dig in.

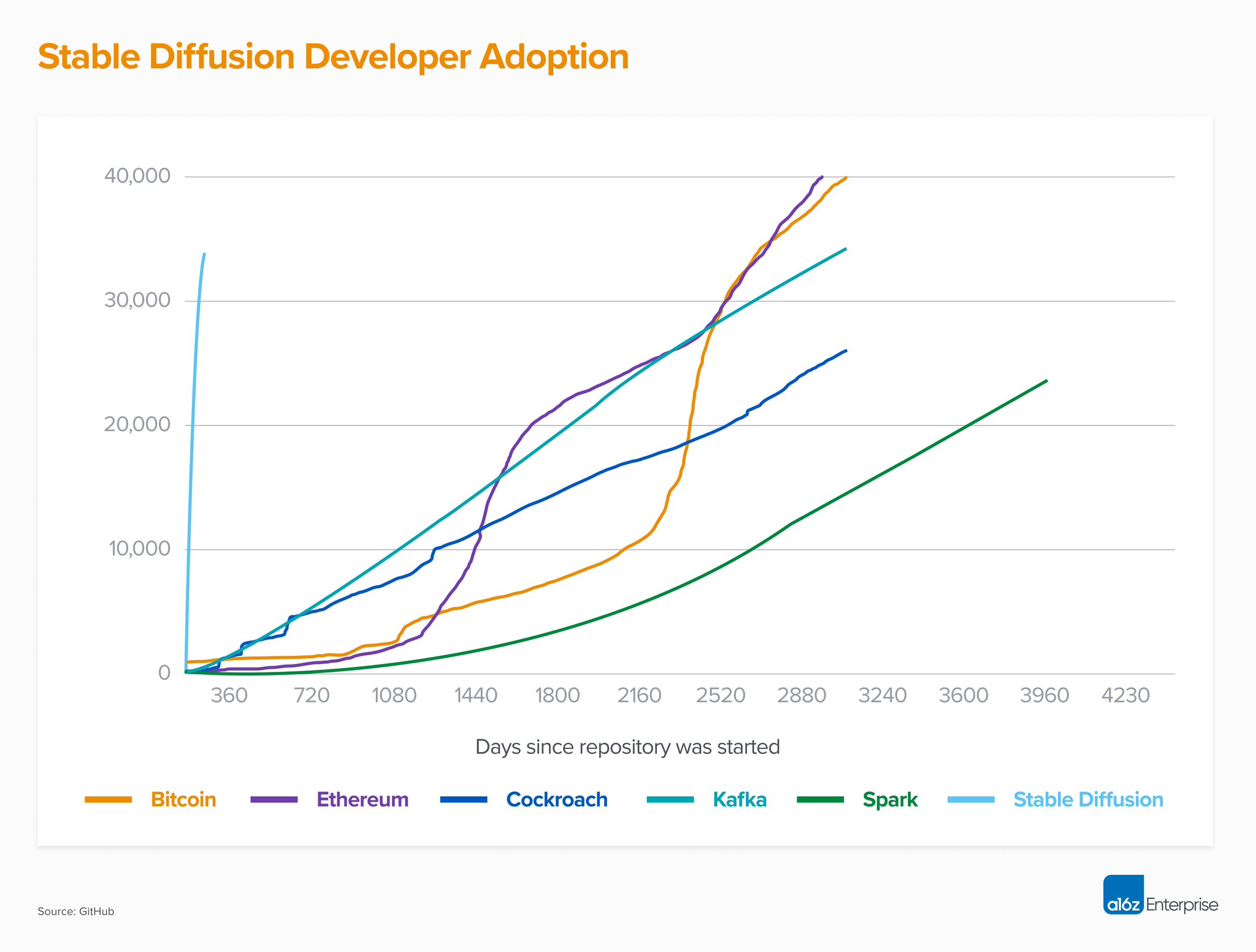

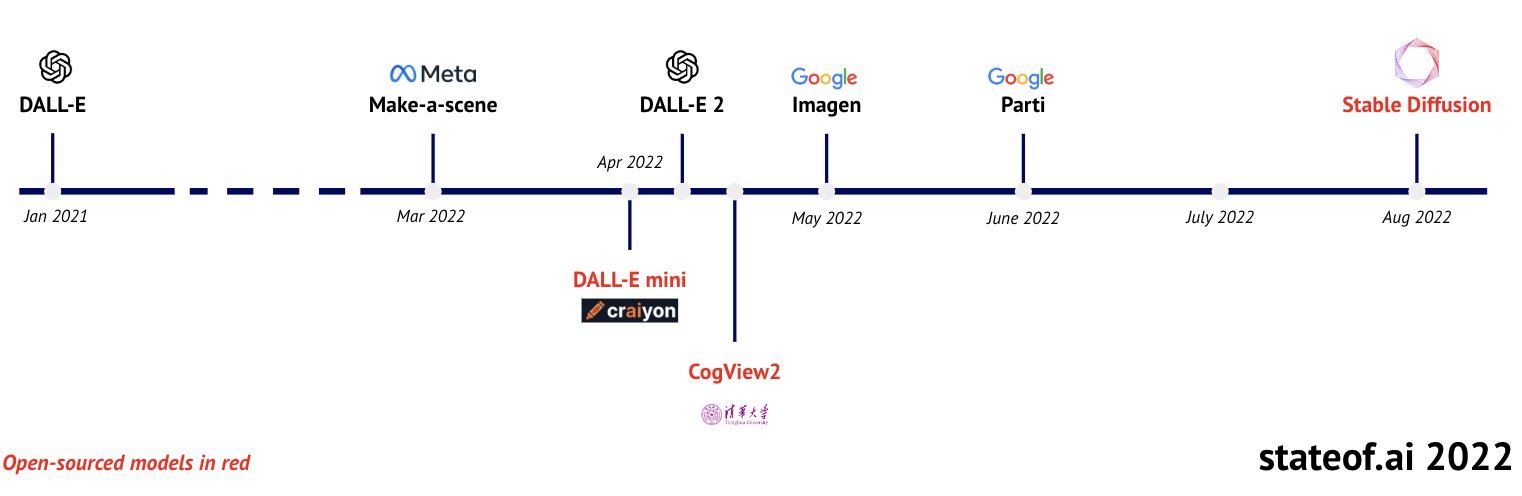

2) Foundation models captured the zeitgeist.

- Midjourney has the largest Discord server ever.

- Stable Diffusion was the fastest company repo ever to reach 35k GitHub stars.

- ChatGPT reached 1 million users in 5 days.

3) AI has begun improving itself.

1. AlphaTensor — Discovers faster matrix multiplication algorithms tinyurl.com/yeyrakfp

2. PrefixRL — Optimizes the design of parallel prefix circuits (e.g., adders) on chips arxiv.org/abs/2205.07000

3. LLMs can self-improve — LLMs fine-tune on their own generations tinyurl.com/j7j298m7

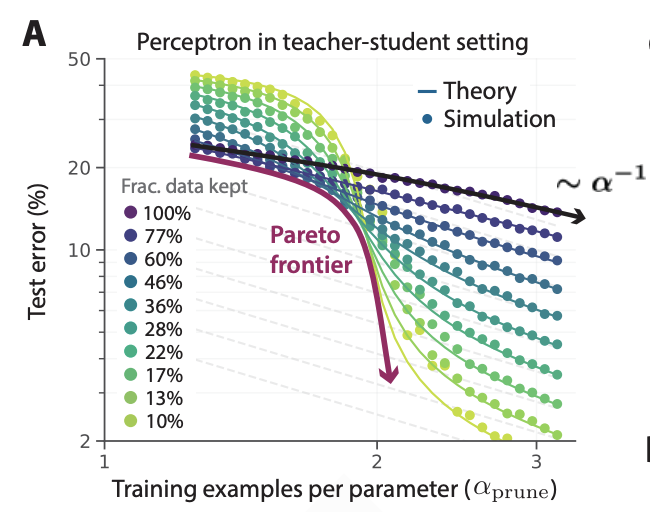

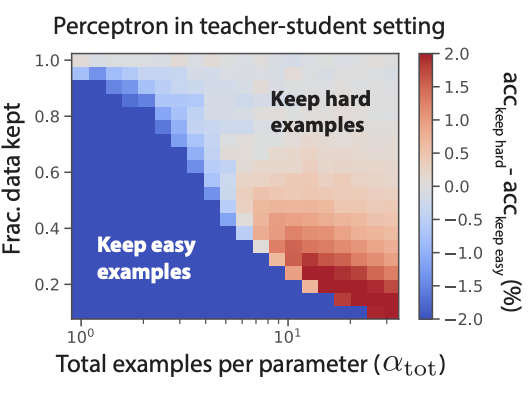

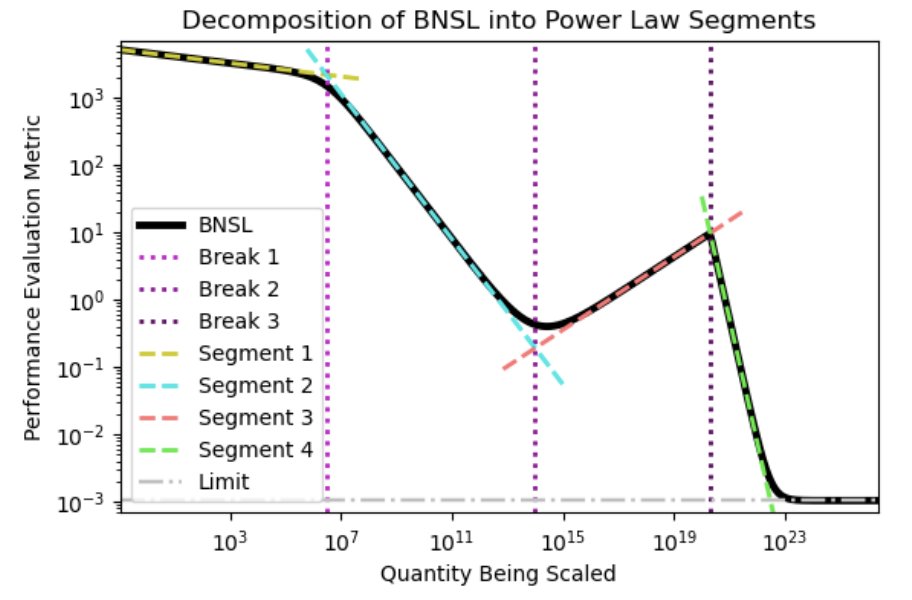

4) Scaling Laws Improve

Historically, AI has scaled through a power law (i.e., error falls as a power of scale, so log(error) drops linearly as log(scale) grows).

Meta shows that through data pruning (i.e., discarding uninformative training examples), you can beat power-law scaling and, in principle, achieve exponential scaling (big deal!)

ai.facebook.com/research/publications/beyond-neural-scaling-laws-beating-power-law-scaling-via-data-p...
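
To make the difference concrete, here is a toy comparison, assuming a power law error(n) = A * n^-ALPHA and an exponential error(n) = A * e^(-K * n). The constants A, ALPHA, and K are made up for illustration and are not taken from the Meta paper. A power law has the same log-log slope at every scale, so each 10x more data cuts error by the same factor; the exponential curve keeps getting steeper.

```python
import math

# Hypothetical constants for illustration only (not fitted to any paper).
A = 1.0       # error scale
ALPHA = 0.5   # power-law exponent
K = 1e-4      # exponential decay rate


def power_law_error(n: float) -> float:
    """Error that falls as a power of dataset size n: A * n^-ALPHA."""
    return A * n ** (-ALPHA)


def exponential_error(n: float) -> float:
    """Error that falls exponentially with dataset size n: A * e^(-K * n)."""
    return A * math.exp(-K * n)


def loglog_slope(f, n1: float, n2: float) -> float:
    """Slope of log(error) vs log(n) between two dataset sizes."""
    return (math.log(f(n2)) - math.log(f(n1))) / (math.log(n2) - math.log(n1))


if __name__ == "__main__":
    for n in (1e3, 1e4, 1e5):
        print(f"n={n:>8.0f}  power law={power_law_error(n):.2e}  "
              f"exponential={exponential_error(n):.2e}")
    # The power law's log-log slope is the same (-ALPHA) at every scale...
    print(loglog_slope(power_law_error, 1e3, 1e4), loglog_slope(power_law_error, 1e4, 1e5))
    # ...while the exponential's log-log slope keeps getting steeper.
    print(loglog_slope(exponential_error, 1e3, 1e4), loglog_slope(exponential_error, 1e4, 1e5))
```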

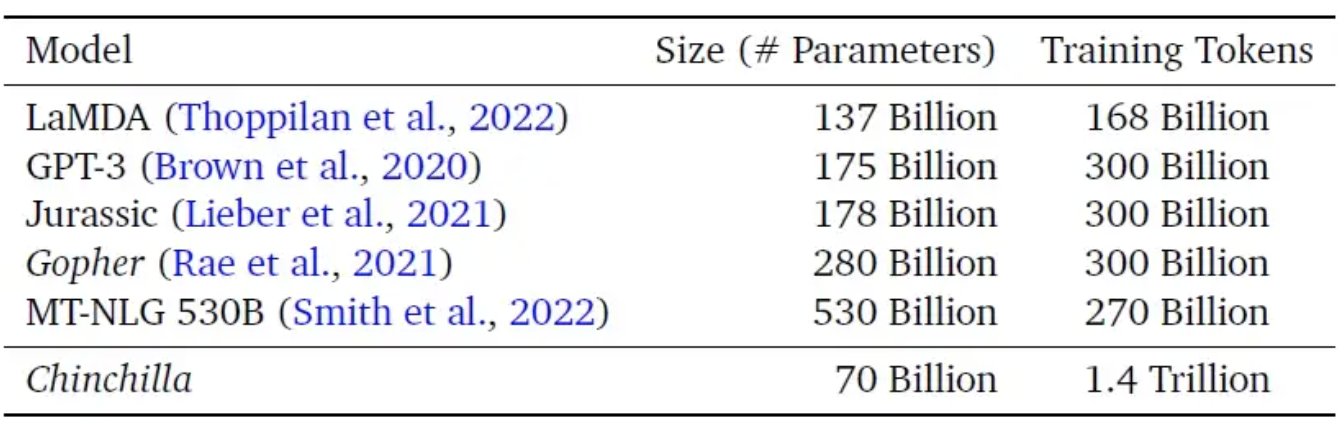

5) Data is the new bottleneck

DeepMind compared two LLMs trained with the same compute budget: Chinchilla, with 4x fewer parameters but ~4x more training data, ended up significantly more accurate than Gopher. Therefore, for LLMs at a fixed compute budget, parameter count is no longer the biggest lever; data is.

arxiv.org/abs/2203.15556
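
A rough way to see this trade-off uses two widely quoted approximations from this line of work: training compute C ≈ 6·N·D FLOPs for N parameters and D training tokens, and a compute-optimal model uses roughly 20 tokens per parameter. The sketch below assumes both (they are simplifications, not the paper's exact fitted law) and solves for N and D given a budget. The helper name is hypothetical.

```python
import math

FLOPS_PER_PARAM_TOKEN = 6.0  # assumed approximation: C ≈ 6 * N * D
TOKENS_PER_PARAM = 20.0      # assumed rule of thumb for compute-optimal training


def compute_optimal(budget_flops: float) -> tuple[float, float]:
    """Return (params, tokens) that spend the budget with D = 20 * N.

    Substituting D = 20N into C = 6ND gives C = 120 N^2, so N = sqrt(C / 120).
    """
    params = math.sqrt(budget_flops / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    tokens = TOKENS_PER_PARAM * params
    return params, tokens


if __name__ == "__main__":
    # Budget implied by a 70B-parameter model trained on 1.4T tokens (Chinchilla's size).
    budget = FLOPS_PER_PARAM_TOKEN * 70e9 * 1.4e12
    n, d = compute_optimal(budget)
    print(f"budget={budget:.2e} FLOPs -> {n / 1e9:.0f}B params, {d / 1e12:.1f}T tokens")
```

Under these assumptions both N and D grow with the square root of compute, so a 4x larger budget buys a 2x larger model trained on 2x more tokens, rather than a 4x larger model on the same data.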

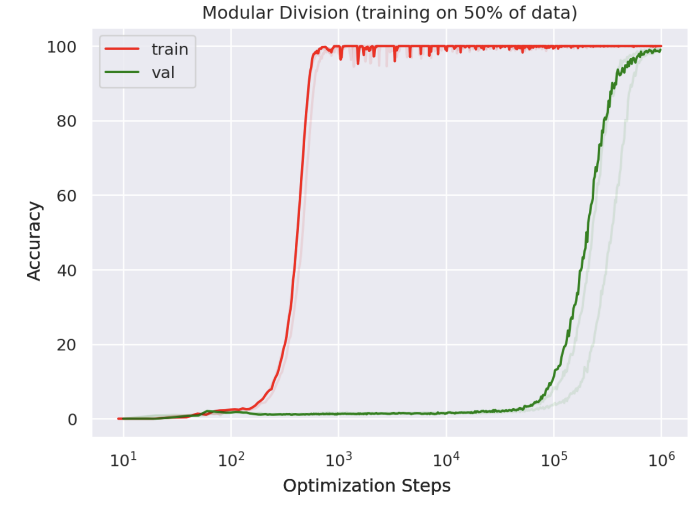

6) Overfitting be gone! 2.0

Grokking shows that on small algorithmic datasets, a network trained well past the point of overfitting can abruptly switch from memorizing its training examples to literally "grokking" the rule that generated the dataset. At that point it becomes a near-perfect predictor on held-out examples.

arxiv.org/pdf/2201.02177.pdf
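
The grokking datasets are tables for binary operations such as addition modulo a prime, with part of the table held out for validation. The sketch below builds such a dataset (the modulus 97 and the 50% split are assumptions for illustration) and contrasts a pure memorizer, which looks up training pairs, with a predictor that has the generating rule. It does not model training; it only shows why the switch from memorizing to "grokking" looks like a jump in validation accuracy. All names are hypothetical.

```python
import random

P = 97                # assumed prime modulus
TRAIN_FRACTION = 0.5  # assumed fraction of the table used for training


def make_dataset(seed: int = 0):
    """All equations (a, b) -> (a + b) mod P, shuffled and split into train/val."""
    pairs = [((a, b), (a + b) % P) for a in range(P) for b in range(P)]
    random.Random(seed).shuffle(pairs)
    cut = int(len(pairs) * TRAIN_FRACTION)
    return pairs[:cut], pairs[cut:]


def accuracy(predict, data) -> float:
    """Fraction of examples where predict(a, b) equals the label."""
    return sum(predict(a, b) == y for (a, b), y in data) / len(data)


def make_memorizer(train):
    """Memorization: recall seen pairs exactly, otherwise guess 0."""
    lookup = dict(train)
    return lambda a, b: lookup.get((a, b), 0)


def rule(a: int, b: int) -> int:
    """The 'grokked' solution: the rule that generated the table."""
    return (a + b) % P


if __name__ == "__main__":
    train, val = make_dataset()
    memorizer = make_memorizer(train)
    print("memorizer train/val:", accuracy(memorizer, train), accuracy(memorizer, val))
    print("rule      train/val:", accuracy(rule, train), accuracy(rule, val))
```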

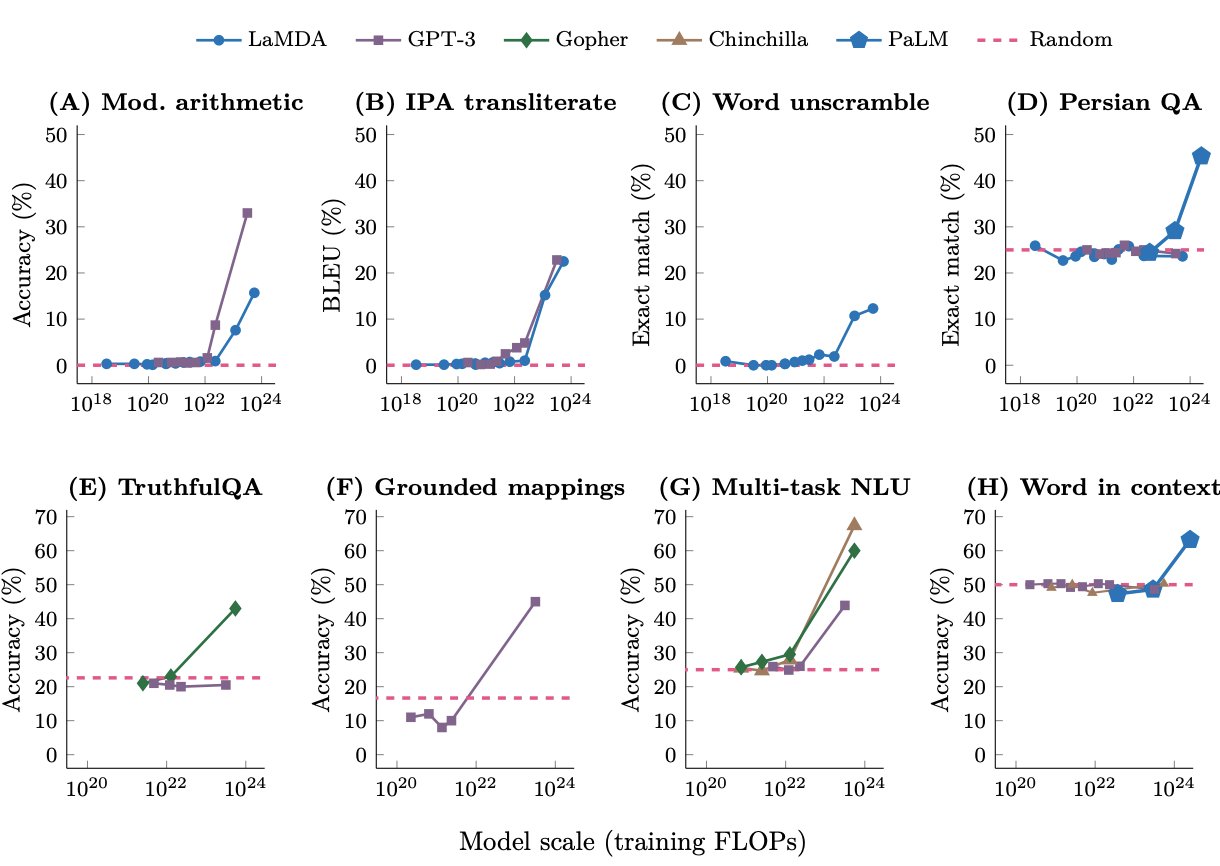

7) Learning capabilities can "emerge" within LLMs

Past a certain scale, LLM performance on some tasks jumps from near-random to well above random instead of improving gradually. LLMs suddenly picking up on the underlying pattern like this is called emergence.

It has been observed for arithmetic such as addition, for question answering, and more.

arxiv.org/pdf/2206.07682.pdf

8) AI doesn't scale through a single power law.

BNSL (Broken Neural Scaling Laws) asserts that AI scales through a series of smoothly connected power laws, with different slopes at different scales.

It accurately models double descent and helps explain phenomena like emergence and grokking. @ethanCaballero

arxiv.org/pdf/2210.14891.pdf
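
For reference, the functional form in the BNSL paper, as I read it, is y = a + b * x^(-c0) * prod_i (1 + (x / d_i)^(1 / f_i))^(-c_i * f_i), where x is the quantity being scaled (compute, parameters, or data) and y is the evaluation metric. The sketch below implements that formula with made-up parameters to show how one break changes the log-log slope from -c0 to roughly -(c0 + c1); check the paper before relying on the exact parameterization.

```python
import math
from typing import Sequence, Tuple


def bnsl(x: float, a: float, b: float, c0: float,
         breaks: Sequence[Tuple[float, float, float]] = ()) -> float:
    """Smoothly broken power law (formula as read from the BNSL paper).

    y = a + b * x^(-c0) * prod_i (1 + (x / d_i)^(1 / f_i))^(-c_i * f_i)

    Each break is (c_i, d_i, f_i): c_i changes the slope, d_i is where the
    break happens, and f_i sets how sharp the transition is.
    """
    y = b * x ** (-c0)
    for c, d, f in breaks:
        y *= (1 + (x / d) ** (1 / f)) ** (-c * f)
    return a + y


def loglog_slope(f, x1: float, x2: float) -> float:
    """Local slope of log(y) vs log(x); only a clean power-law slope when a = 0."""
    return (math.log(f(x2)) - math.log(f(x1))) / (math.log(x2) - math.log(x1))


if __name__ == "__main__":
    # Hypothetical parameters: one sharp break at x = 1e4 that steepens the slope.
    def curve(x: float) -> float:
        return bnsl(x, a=0.0, b=1.0, c0=0.1, breaks=[(0.5, 1e4, 0.1)])

    print("slope well before the break:", round(loglog_slope(curve, 1e1, 1e2), 3))
    print("slope well after the break: ", round(loglog_slope(curve, 1e6, 1e7), 3))
```

With no breaks the formula reduces to an ordinary power law plus an offset; each break smoothly bends the curve onto a new slope, which is how a single fit can cover regimes that look like separate scaling laws.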

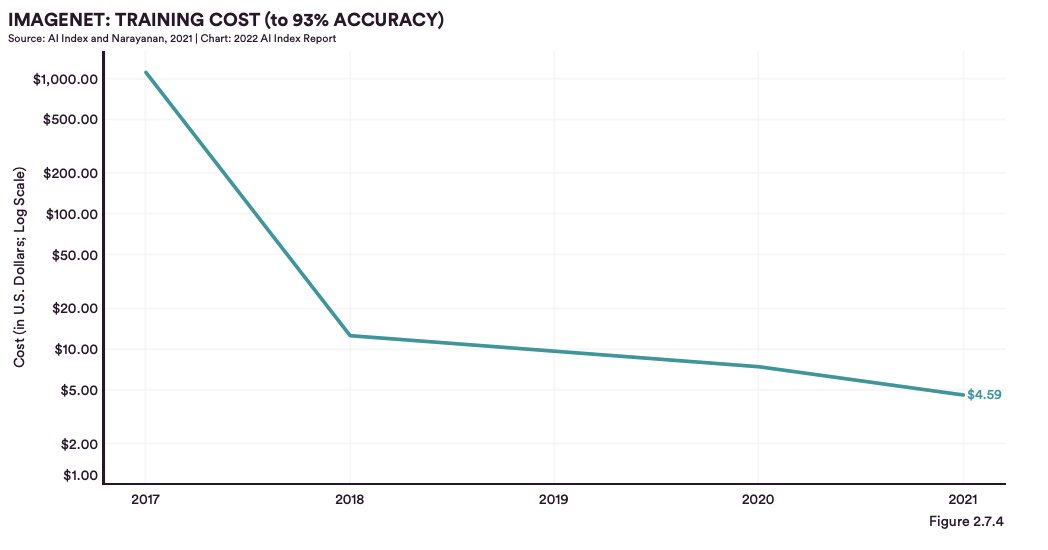

9) The cost to create performant AI is decreasing.

In the case of ImageNet, the cost to achieve 93% accuracy has halved in the past 2 years.

aiindex.stanford.edu/wp-content/uploads/2022/03/2022-AI-Index-Report_Master.pdf
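
Taking the halving figure at face value, the implied yearly decline follows from compounding: if cost falls by half over 2 years, each year it is multiplied by 0.5^(1/2) ≈ 0.71, roughly 29% cheaper per year. A small hypothetical helper makes the arithmetic explicit, assuming a steady rate of decline:

```python
def annual_cost_factor(total_factor: float, years: float) -> float:
    """Per-year multiplier implied by a total change over `years`, assuming steady compounding."""
    return total_factor ** (1 / years)


if __name__ == "__main__":
    factor = annual_cost_factor(0.5, 2)  # cost halved over 2 years
    print(f"cost multiplier per year: {factor:.3f} ({1 - factor:.0%} cheaper each year)")
```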

10) Coherence solved?

DreamBooth mitigates the coherence problem in image generation (keeping a subject consistent across generations) by fine-tuning a model on a few images of a subject and binding that subject to a unique identifier in the prompt.

This method powers AI avatar creators, game asset generators, AI movies, and more.

dreambooth.github.io/
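
In the DreamBooth paper, the model is fine-tuned on a handful of subject images captioned "a [identifier] [class noun]", while a prior-preservation loss on images the original model generated for "a [class noun]" keeps it from forgetting what the class looks like in general. The sketch below shows only the prompt construction and how the two loss terms combine. The images are stand-in lists of floats, the identifier "sks" is an assumed example, and the helper names are hypothetical, not an API from the paper or any library.

```python
from typing import List


def build_prompts(identifier: str, class_noun: str) -> tuple[str, str]:
    """Return (instance_prompt, class_prompt)."""
    instance_prompt = f"a {identifier} {class_noun}"  # binds the rare identifier to the subject
    class_prompt = f"a {class_noun}"                  # generic prompt used for prior preservation
    return instance_prompt, class_prompt


def mse(pred: List[float], target: List[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def dreambooth_loss(instance_pred: List[float], instance_target: List[float],
                    prior_pred: List[float], prior_target: List[float],
                    prior_weight: float = 1.0) -> float:
    """Reconstruction loss on the subject's images plus a weighted class-prior term."""
    return mse(instance_pred, instance_target) + prior_weight * mse(prior_pred, prior_target)


if __name__ == "__main__":
    instance_prompt, class_prompt = build_prompts("sks", "dog")
    print(instance_prompt, "|", class_prompt)
    # Stand-in model reconstructions and targets for one subject image and one prior image.
    print(dreambooth_loss([0.9, 0.1], [1.0, 0.0], [0.5], [0.4], prior_weight=1.0))
```

The prior weight trades off fidelity to the subject against keeping the model's general notion of the class intact.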

11) Diplomacy cracked.

In Diplomacy, you try to conquer Europe against 6 other players/countries. It is a game of cooperation, negotiation, and manipulation.

CICERO achieves human-level performance by tying strategic reasoning to natural language.

tinyurl.com/4dhmx37u

12) AI Accelerating Fusion

DeepMind used reinforcement learning to control inherently unstable plasma by coordinating a tokamak's many magnetic coils. The controller can shape and control plasma in new ways, and it's a huge unlock for fusion.

www.deepmind.com/blog/accelerating-fusion-science-through-learned-plasma-control

13) Bridging domains for more data

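To create a labeled Minecraft dataset, OpenAI trained an inverse dynamics model on a small amount of labeled gameplay, then used it to infer the player's actions in a large set of unlabeled Minecraft videos.

This kind of cross-domain data collection (video to 3D actions) will become more common as data becomes more valuable.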

openai.com/blog/vpt/
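
The labeling trick can be sketched in a toy setting. Here a 1D world stands in for Minecraft and a frequency table stands in for the neural inverse dynamics model (both assumptions; VPT's IDM is a network over video frames). The key idea it keeps is that the IDM sees both frame t and frame t+1, so inferring the action is much easier than predicting it. Function names are hypothetical, and the final VPT step, behavioral cloning on the pseudo-labeled data, is omitted.

```python
import random
from collections import Counter, defaultdict

ACTIONS = (-1, 0, 1)  # toy action space: move left, stay, move right


def record_episode(length: int, rng: random.Random):
    """Toy 'gameplay video': positions (frames) plus the actions that produced them."""
    positions, actions = [0], []
    for _ in range(length):
        a = rng.choice(ACTIONS)
        actions.append(a)
        positions.append(positions[-1] + a)
    return positions, actions


def train_idm(labeled):
    """Fit a toy inverse dynamics model: map each frame change to its most common action.

    The model is non-causal: it looks at frame t and frame t+1 to infer action t.
    """
    counts = defaultdict(Counter)
    for positions, actions in labeled:
        for t, a in enumerate(actions):
            counts[positions[t + 1] - positions[t]][a] += 1
    table = {delta: c.most_common(1)[0][0] for delta, c in counts.items()}
    return lambda frame_t, frame_next: table.get(frame_next - frame_t, 0)


def pseudo_label(idm, videos):
    """Attach IDM-inferred actions to unlabeled videos (lists of positions)."""
    return [(pos, [idm(pos[t], pos[t + 1]) for t in range(len(pos) - 1)]) for pos in videos]


if __name__ == "__main__":
    rng = random.Random(0)
    labeled = [record_episode(20, rng) for _ in range(5)]   # small labeled set
    hidden = [record_episode(20, rng) for _ in range(100)]  # large "internet video" set
    idm = train_idm(labeled)
    relabeled = pseudo_label(idm, [pos for pos, _ in hidden])  # true actions discarded
    correct = sum(p == a for (_, acts), (_, preds) in zip(hidden, relabeled)
                  for a, p in zip(acts, preds))
    total = sum(len(acts) for _, acts in hidden)
    print(f"IDM label accuracy on unlabeled videos: {correct / total:.2%}")
```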

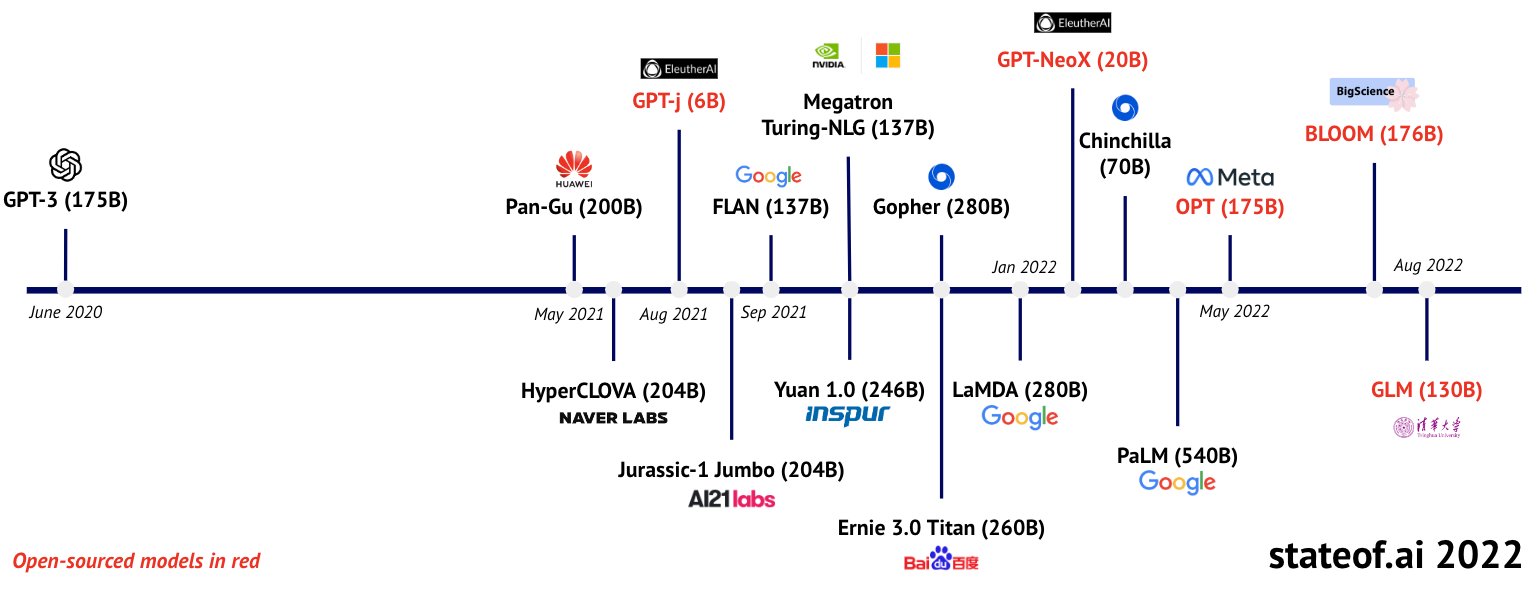

14) The foundation model land grab

After the explosion of LLMs and text-to-image models, there's been a race to be the first mover in new domains:

- Audio (Whisper, Harmonai)

- Video (Make-a-Video, Imagen Video)

- 3D (DreamFusion, Magic3D)

15) The democratization of AI continues.

It took 1 year for open-source LLM alternatives to GPT-3 to appear.

It took 1 month for DALL-E 2 to see the same.

tinyurl.com/4phsn266

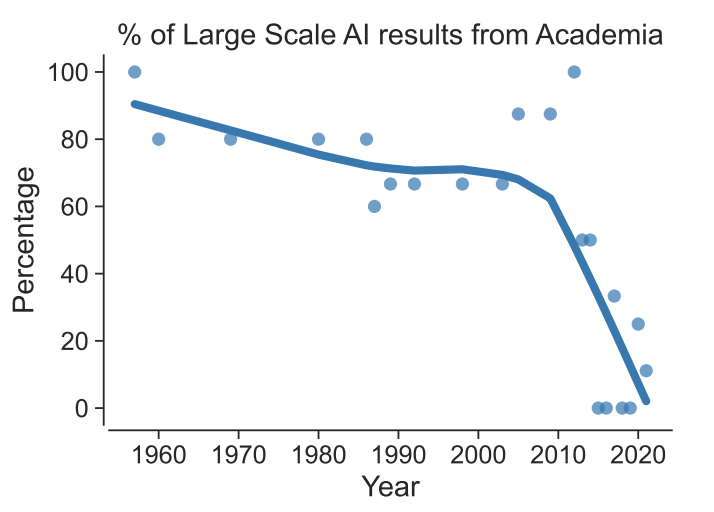

16) Academia accounts for a decreasing proportion of large-scale AI papers.

AI used to be siloed within large research institutions, then big tech, and now is accessible to all via APIs and open source.

tinyurl.com/2smh6tkm

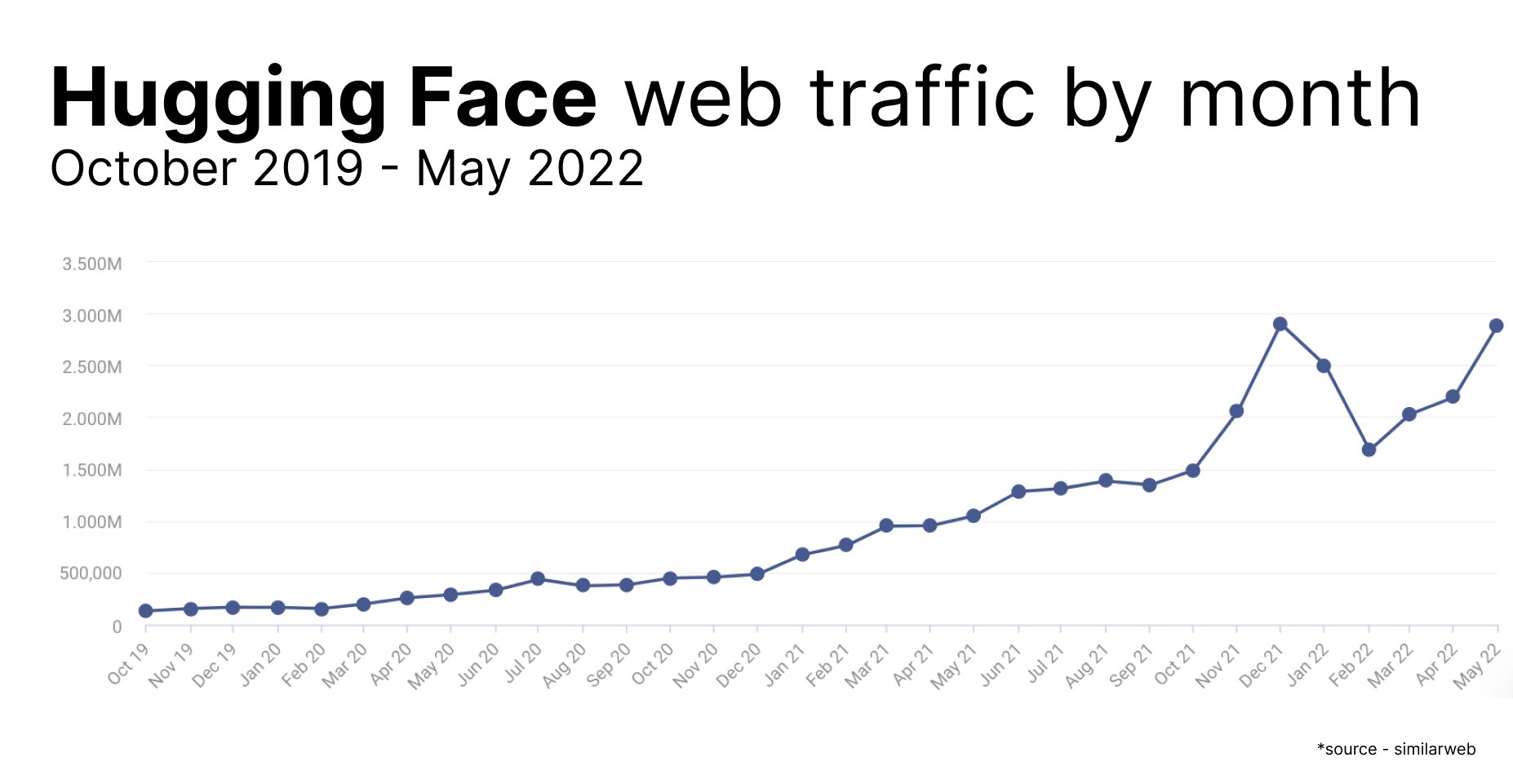

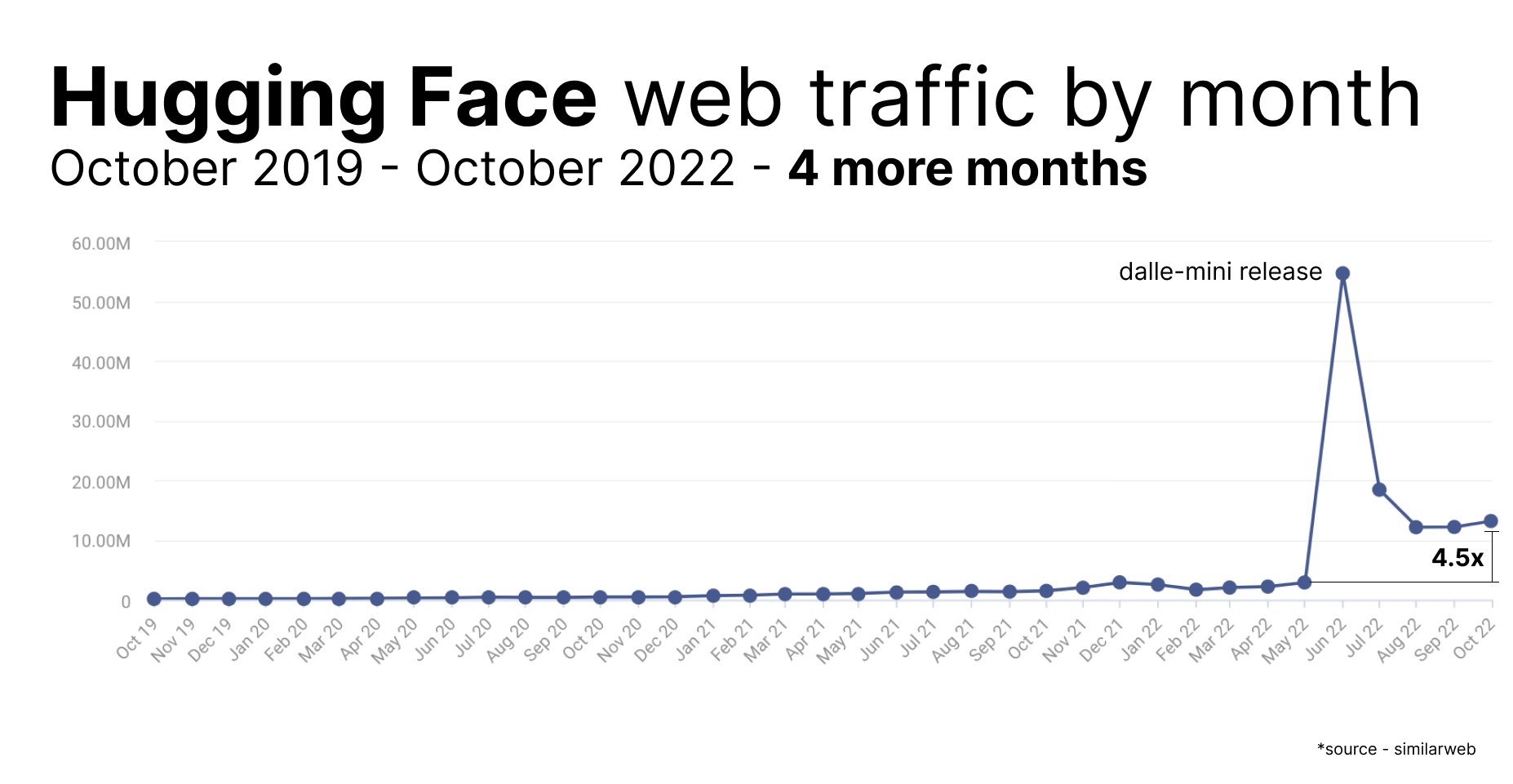

17) Horizontal model aggregators and tools have been growing

Two examples are Hugging Face and Replicate. Hugging Face had a "gradually, then suddenly" moment: it grew steadily until the DALL-E mini release, when usage went up 4.5x overnight.

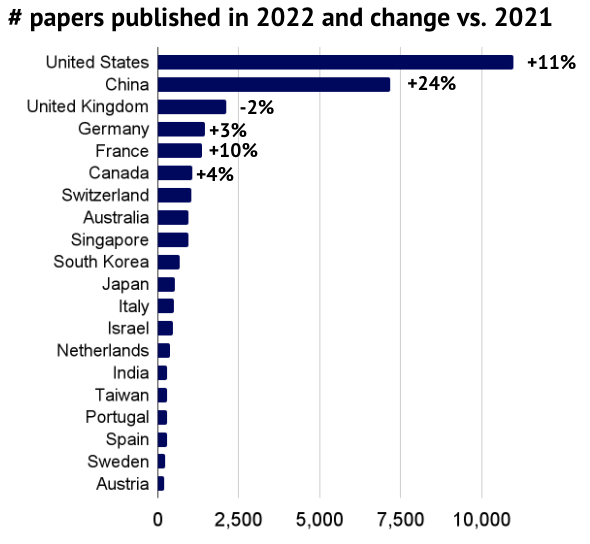

18) The US vs China "Latent Space Race" is heating up

The US publishes the most AI research, with China second, but China's output is growing faster.

tinyurl.com/2jnuu6t9

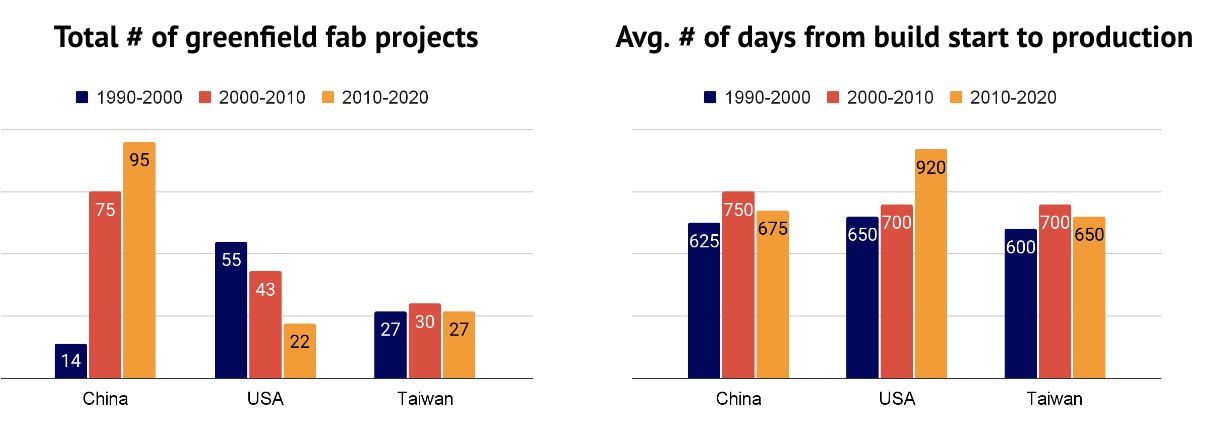

19) The US is lagging behind China in new chip projects and the speed to production.

This matters because model performance scales with compute.

The US recently passed $250B in funding for semiconductor R&D and production, in hopes of narrowing this gap.

tinyurl.com/24rpw24r

20) The US banned NVIDIA and AMD from exporting their best GPUs to China.

This will hamper Chinese AI R&D, at least temporarily. The question is how long it will take China to build GPUs of similar quality (which relies on trade secrets and is conceivably very... very... hard to do).

That's all — huge s/o to @ykilcher for ML News and Paper Reviews, @soundboy and @nathanbenaich for their 2022 State of AI Report, and Stanford for their 2022 AI Index.

Yannic YT — www.youtube.com/@ykilcher

State of AI — tinyurl.com/2fs6jubf

AI Index — hai.stanford.edu/research/ai-index-2022

All considered, the key trends I'm following in AI are:

1. Acceleration

2. The Democratization of AI

3. AI Improving Itself

4. US vs China — The latent space race

5. Software simulators to solve real-world problems

6. Bridging domains — i.e., turning video data into 3D data

Here at @a16z GAMES FUND ONE, we see games as one of the first verticals to be disrupted by generative AI.

Read our thinking here: a16z.com/2022/11/17/the-generative-ai-revolution-in-games/

And follow me, @gwertz, and @tkexpress11 for more in AI
